Gradient Descent

After choosing a cost function, you need to minimize it to lower the error. How do we do that? With gradient descent, a method that helps us find the minimum of a function.

Now you may be asking: why use gradient methods at all, instead of just taking the derivative of the function and using analytic math to find the minimum? For very complex networks this is not an option. One reason is the very high computational cost. Another is that, in real life, the equations we would have to solve to find the minimum are too complex to solve directly. That is why we use gradient descent.

There are different methods to optimize the cost function. They are called optimization methods, and all of them use some modification of gradient descent to find the minimum. (There are others, such as Newton's method, but they use second-order derivatives.) Each method evolved from earlier variants of gradient descent to overcome their limitations. For example, regular (batch) gradient descent struggles with big datasets. It computes the gradient over the entire dataset at every step, which can require more memory than is available.

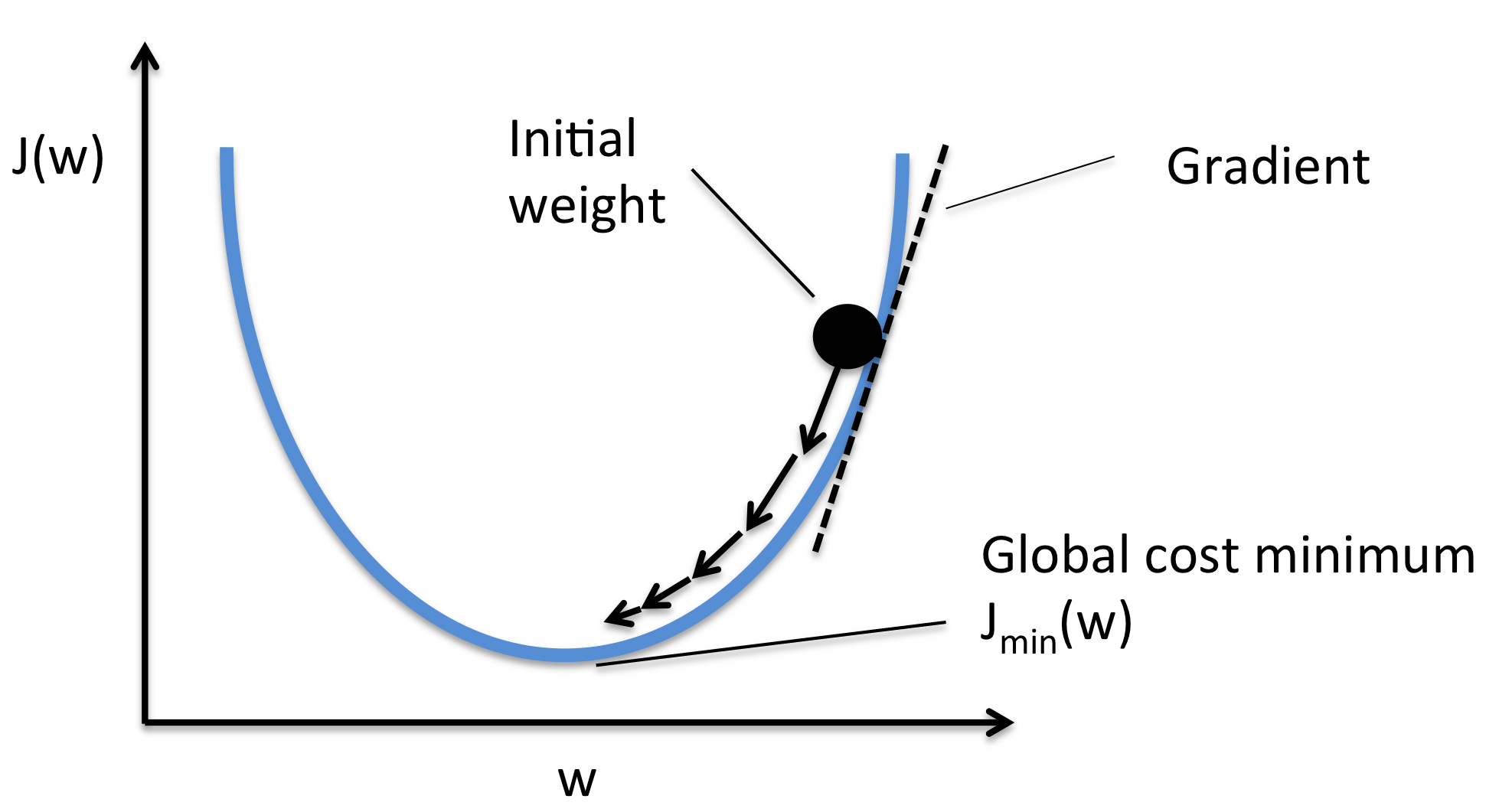

How Gradient Descent works

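Gradient descent works by first choosing an arbitrary starting point and then repeatedly taking small steps against the gradient, which points in the direction of steepest increase. Each step multiplies the gradient by alpha, the learning rate (a small number, for example 0.01), and subtracts the result from the current point: new point = old point − alpha × gradient. You repeat this until the steps become negligibly small, which means you have reached a minimum (in general, a local one).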
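The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not a production optimizer. It assumes a one-dimensional cost function whose gradient we already know. The stopping tolerance `tol` and the iteration cap `max_iters` are illustrative assumptions, and so is the example cost J(w) = (w − 3)², whose minimum is at w = 3. Only alpha = 0.01 comes from the text. For this simple function you could find the minimum analytically by setting the derivative 2(w − 3) to zero, which makes it easy to check that gradient descent lands in the same place.

```python
def gradient_descent(grad, start, alpha=0.01, tol=1e-8, max_iters=100_000):
    """Minimize a 1-D function given its gradient `grad`.

    `tol` and `max_iters` are illustrative stopping rules (assumptions),
    not a standard prescribed by any particular library.
    """
    x = start  # the arbitrary starting point
    for iteration in range(1, max_iters + 1):
        step = alpha * grad(x)  # learning rate times gradient
        x -= step               # move against the gradient
        if abs(step) < tol:     # steps are negligibly small: stop
            return x, iteration
    return x, max_iters


# Hypothetical example cost J(w) = (w - 3)^2 and its derivative.
def cost(w):
    return (w - 3.0) ** 2


def cost_grad(w):
    return 2.0 * (w - 3.0)


w_min, steps = gradient_descent(cost_grad, start=10.0, alpha=0.01)
# w_min should be very close to the analytic minimum w = 3
print(f"w = {w_min:.4f} after {steps} steps, cost = {cost(w_min):.2e}")
```

Setting alpha too large can make the steps overshoot and diverge, while setting it too small makes convergence slow. That is why alpha is usually a small number like 0.01.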