Gradient descent for linear regression

We have already talked about linear regression, which is a method used to find the relationship between two variables. It tries to find the line that best fits all the points, and with that line we can make predictions over a continuous set (regression predicts a value from a continuous set, for example, the price of a house depending on its size). How are we going to accomplish that? Well, we need to measure the error between each point and the line, then find the slope and the value of b (the intercept with the y-axis) to get the linear regression equation.
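To make "measuring the error" concrete, here is a minimal sketch. It assumes the error is the mean of the squared vertical distances between each point and the line (one common choice, not the only one). The names `predict`, `mean_squared_error`, and `m` (for the slope) are made up for illustration, and so is the data.

```python
def predict(m, b, x):
    # Linear regression equation: y = m * x + b
    return m * x + b

def mean_squared_error(m, b, xs, ys):
    # Average of the squared vertical distances between points and the line
    errors = [(y - predict(m, b, x)) ** 2 for x, y in zip(xs, ys)]
    return sum(errors) / len(errors)

# Illustrative data that lies exactly on y = 2x + 1
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

print(mean_squared_error(2.0, 1.0, xs, ys))  # 0.0, the line passes through every point
print(mean_squared_error(1.0, 0.0, xs, ys))  # 13.5, a worse line gives a larger error
```

The smaller this number is, the better the line fits the points, so finding the best line means finding the slope and b that make it as small as possible.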

We also talked about how to find the best line using concepts such as covariance, variance, and the method of least squares.

Now we are going to find the line that best fits our data using gradient descent, an optimization method used in machine learning.

Gradient Descent

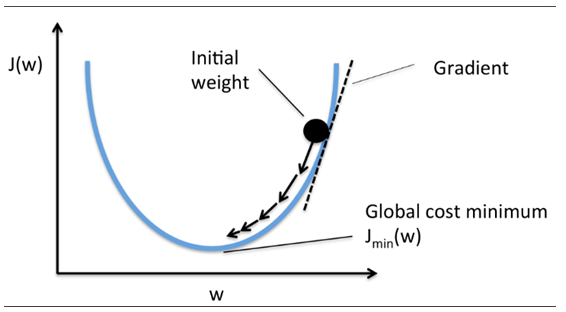

Gradient descent is a first-order optimization method, which means that it uses the first derivative to find a local minimum. More precisely, when the function depends on several variables, it uses the partial derivatives with respect to each of them.
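For linear regression, the partial derivatives can be written out explicitly. The following assumes the function we minimize is the mean squared error over $n$ points $(x_i, y_i)$, and uses $m$ for the slope (a symbol chosen here for illustration) and $b$ for the intercept:

$$
J(m, b) = \frac{1}{n} \sum_{i=1}^{n} \bigl(y_i - (m x_i + b)\bigr)^2
$$

$$
\frac{\partial J}{\partial m} = -\frac{2}{n} \sum_{i=1}^{n} x_i \bigl(y_i - (m x_i + b)\bigr),
\qquad
\frac{\partial J}{\partial b} = -\frac{2}{n} \sum_{i=1}^{n} \bigl(y_i - (m x_i + b)\bigr)
$$

Together, these two partial derivatives form the gradient of $J$.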

The first derivative gives us the slope of the function. To reach the minimum, we need to move against the direction of the slope, so we subtract the gradient multiplied by a small positive value called alpha. Alpha is also known as the step size or the learning rate. At each step, alpha controls how far we move against the gradient, taking us gradually toward the function's minimum.
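As a rough sketch of that idea, the snippet below repeatedly moves against the derivative of a simple one-variable function. The function f(x) = (x - 3)^2, the starting point, and the value of alpha are all assumptions picked for illustration. Its minimum is at x = 3.

```python
def gradient(x):
    # Derivative of the illustrative function f(x) = (x - 3) ** 2
    return 2 * (x - 3)

def step(x, alpha):
    # Move against the gradient, scaled by the learning rate alpha
    return x - alpha * gradient(x)

x = 0.0      # arbitrary starting point
alpha = 0.1  # learning rate (assumed value)

for _ in range(100):
    x = step(x, alpha)

print(x)  # very close to 3, the minimum of f
```

If alpha is too large, the steps can overshoot the minimum. If it is too small, the method needs many more iterations to get there.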

We stop when the change in the gradient from one step to the next becomes too small, or when the gradient itself is sufficiently small.
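Putting the pieces together, a possible sketch of gradient descent for a line with both stopping rules might look like this. It assumes the mean squared error as the function being minimized. The names `gradients` and `fit_line`, the tolerances, the learning rate, and the data are all illustrative choices rather than fixed parts of the method.

```python
import math

def gradients(m, b, xs, ys):
    # Partial derivatives of the mean squared error with respect to m and b
    n = len(xs)
    grad_m = sum(-2 * x * (y - (m * x + b)) for x, y in zip(xs, ys)) / n
    grad_b = sum(-2 * (y - (m * x + b)) for x, y in zip(xs, ys)) / n
    return grad_m, grad_b

def fit_line(xs, ys, alpha=0.05, grad_tol=1e-6, change_tol=1e-12, max_iters=10_000):
    m, b = 0.0, 0.0   # arbitrary starting line
    prev_norm = None
    for _ in range(max_iters):
        grad_m, grad_b = gradients(m, b, xs, ys)
        norm = math.hypot(grad_m, grad_b)  # size of the gradient
        # Stop when the gradient is sufficiently small
        if norm < grad_tol:
            break
        # Stop when the gradient has almost stopped changing
        if prev_norm is not None and abs(prev_norm - norm) < change_tol:
            break
        # Move against the gradient, scaled by alpha
        m -= alpha * grad_m
        b -= alpha * grad_b
        prev_norm = norm
    return m, b

# Illustrative data that lies exactly on y = 2x + 1
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

m, b = fit_line(xs, ys)
print(m, b)  # close to 2 and 1
```

The `max_iters` limit is a safety net: if alpha is badly chosen and neither stopping rule is ever met, the loop still ends.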